The following was posted on Medium by Paul Bakaus, AMP Developer Advocate, Google.

Caches are a fundamental piece of the Accelerated Mobile Pages (AMP) Project, yet one of the most misunderstood components. Every day, developers ask us why they can’t just get their AMP pages onto some AMP surfaces (e.g. Google) without linking through the cache. Some worry about the cache model breaking the origin model of the web, others worry about analytics attribution and canonical link sharing, and still others worry about their pages being hosted on servers out of their control. Let’s look at all of these, and understand why the caches exist.

While AMP Caches introduce some trade-offs, they do work in the user’s favor to ensure a consistently fast and user-friendly experience. The caches are designed to:

- Ensure that all AMP pages are actually valid AMP.

- Allow AMP pages to be preloaded efficiently and safely.

- Do a myriad of additional user-beneficial performance optimizations to content.

But first:

The Basics: Analytics attribution and link sharing

Even though the AMP Cache model doesn’t follow the origin model (serving your page from your own domain), we attribute all traffic to you, the publisher. Through the <amp-analytics> tag, AMP supports a growing number of analytics providers (26 to date!), so you can measure your success and be sure the traffic is correctly attributed.

When I ask users and developers why they want to “click through” to the canonical page from a cached AMP result, the answer is often about link sharing. And granted, it’s annoying to copy a google.com URL instead of the canonical URL. However, the issue isn’t as large a problem as you’d think: Google amends its cached AMP search results with Schema.org and OpenGraph metadata, so posting the link to any platform that honors these should result in the canonical URL being shared. That being said, there are more opportunities to improve the sharing flow. In native web-views, one could share the canonical directly if the app supports it, and, based on users’ feedback, the team at Google is working on enabling easy access to the canonical URL on all its surfaces.
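To make the sharing point concrete, here is a minimal sketch of how a platform that receives a pasted link might recover the canonical URL from the page's metadata. It assumes the platform honors the OpenGraph `og:url` property and falls back to `<link rel="canonical">`; the names `CanonicalFinder` and `url_to_share` and the example URLs are hypothetical, and real platforms may apply a different precedence.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects og:url and <link rel="canonical"> while parsing (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.og_url: Optional[str] = None
        self.link_canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and a.get("property") == "og:url":
            # Keep the first value seen.
            self.og_url = self.og_url or a.get("content")
        elif tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.link_canonical = self.link_canonical or a.get("href")


def url_to_share(page_html: str, fetched_url: str) -> str:
    """Prefer metadata pointing at the publisher; fall back to the URL that was pasted."""
    finder = CanonicalFinder()
    finder.feed(page_html)
    return finder.og_url or finder.link_canonical or fetched_url


# Made-up cached page and cache URL, for illustration only.
cached_page = """
<html><head>
<link rel="canonical" href="https://publisher.example/story.html">
<meta property="og:url" content="https://publisher.example/story.html">
</head><body></body></html>
"""
print(url_to_share(cached_page, "https://cache.example/c/s/publisher.example/story.html"))
# prints https://publisher.example/story.html
```

A platform that ignores this metadata would simply share the cache URL it was given, which is the fallback branch above.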

With these cleared up, let’s dig a little deeper.

When the label says AMP, you get AMP

The AMP Project consists of an ecosystem that depends on strict validation, ensuring that very high performance and quality bars are met. One version of a validator can be used during development, but the AMP Cache ensures the validity at the last stage, when presenting content to the user.

When an AMP page is requested from an AMP Cache for the first time, the cache validates the document first, and won’t offer it to the user if it detects problems. Platforms integrating with AMP (e.g. Bing, Pinterest, Google) can choose to send traffic directly to the AMP page on the origin or optionally to an AMP Cache, but validity can only be guaranteed when the page is served from the cache. This ensures that when users see the AMP label, they’ll almost always get a fast and user-friendly experience. (Unless you find a way to make a slow-but-valid AMP page, which is hard, but not impossible… I’m looking at you, big web fonts).
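The validate-on-first-request behavior described above can be sketched roughly as follows. This is an illustration under stated assumptions, not how any real AMP Cache is implemented: `ValidatingCache` and `toy_validate` are hypothetical names, the URLs are made up, and the validator is a stand-in that only checks for the `amp` (or `⚡`) attribute on `<html>`, whereas the real AMP validator enforces many more rules.

```python
from typing import Callable, Dict, List, Optional


def toy_validate(document: str) -> List[str]:
    """Stand-in for the real AMP validator, which checks far more than this."""
    if "<html amp" in document or "<html ⚡" in document:
        return []
    return ["missing the amp (or ⚡) attribute on <html>"]


class ValidatingCache:
    """Hypothetical cache that only stores and serves documents that validate."""

    def __init__(self, fetch_origin: Callable[[str], str],
                 validate: Callable[[str], List[str]]) -> None:
        self._fetch_origin = fetch_origin
        self._validate = validate
        self._store: Dict[str, str] = {}

    def get(self, url: str) -> Optional[str]:
        if url in self._store:
            return self._store[url]          # already validated earlier
        document = self._fetch_origin(url)   # first request: go to the origin
        if self._validate(document):         # a non-empty error list means invalid
            return None                      # invalid documents are never served
        self._store[url] = document
        return document


# A dictionary plays the role of the publisher's origin server here.
origin = {
    "https://publisher.example/good": "<html amp><head></head><body>hi</body></html>",
    "https://publisher.example/bad": "<html><head></head><body>hi</body></html>",
}
cache = ValidatingCache(origin.__getitem__, toy_validate)
print(cache.get("https://publisher.example/good") is not None)  # prints True
print(cache.get("https://publisher.example/bad"))               # prints None
```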

Pre-rendering is a bigger deal than you think

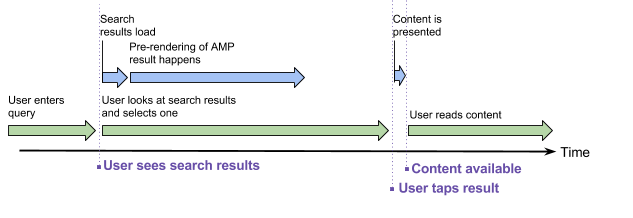

If you take anything away from this post, it’s that pre-rendering, especially the variant in AMP, greatly outweighs any speed gains you could theoretically get by hosting directly from an origin server. Even if the origin server is closer to your users (rare but possible), which would shave off a few milliseconds, pre-rendering will almost certainly have the greater impact.

Perceived as much faster

In fact, pre-rendering can often save you seconds, not milliseconds. The impact of pre-rendering, as opposed to the various other performance optimizations in the AMP JS library, can be pretty dramatic, and contributes largely to the “instant-feel” experience.

Very efficient compared to full pre-rendering

If that were the whole story, we could just as easily pre-render AMP pages from their origin servers. But if we did, we couldn’t guarantee that a page on the origin is valid AMP, and valid AMP is critically important for the custom pre-rendering the AMP JS library provides. Unlike pre-rendering an entire page through something like link prefetching, pre-rendering in AMP limits the use of the user’s bandwidth, CPU and battery!

Valid AMP documents behave “cooperatively” during the pre-render stage: Only assets in the first viewport get preloaded, and no third-party scripts get executed. This results in a much cheaper preload that uses less bandwidth and CPU, allowing platforms to pre-render not just the first, but a few of the AMP pages a user will likely click on.
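As a rough illustration of that “cooperative” behavior, the sketch below filters a page's resources during pre-render so that only first-viewport assets are fetched and third-party scripts are left out. The `Resource` type, the `resources_to_load` function and the pixel-offset model are assumptions made for this example; the AMP JS library's actual scheduling is more involved, and this sketch ignores lazy loading once the page is visible.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resource:
    url: str
    top_px: int                       # vertical offset of the element on the laid-out page
    third_party_script: bool = False  # e.g. an ad or tracking script


def resources_to_load(resources: List[Resource], viewport_height_px: int,
                      prerendering: bool) -> List[Resource]:
    """During pre-render, keep only first-viewport assets and skip third-party scripts."""
    if not prerendering:
        # Simplification: once the user opens the page, everything is eligible.
        return list(resources)
    return [
        r for r in resources
        if not r.third_party_script and r.top_px < viewport_height_px
    ]


page = [
    Resource("https://publisher.example/hero.jpg", top_px=0),
    Resource("https://ads.example/tag.js", top_px=300, third_party_script=True),
    Resource("https://publisher.example/chart.png", top_px=1800),
]
preloaded = resources_to_load(page, viewport_height_px=640, prerendering=True)
print([r.url for r in preloaded])
# prints ['https://publisher.example/hero.jpg']
```

Because the static layout is known up front, a decision like this can be made before any image bytes are downloaded, which is what keeps pre-rendering several pages affordable.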

Safe to embed

Because AMP Caches can’t rely on browser pre-rendering (see the section above), normal navigation from page to page doesn’t work. So in the AMP caching model, a page needs to be opened inline on a platform page. AMP Caches ensure that the requested AMP page can do that safely:

- The validator ensures there is no Cross-Site Scripting (XSS) in the main document.

- On top of the validator, the AMP Cache parses and then re-serializes the document in an unambiguous fashion (this means that it does not rely on HTML5 error correction). This ensures that browser parsing bugs and differences cannot lead to XSS.

- The cache applies a Content Security Policy (CSP). This provides additional defense-in-depth against XSS attacks.

Additional privacy

In addition, the AMP Caches remove one important potential privacy issue from the pre-render: When you do a search on a content platform preloading AMP pages on the result page, none of the preloaded AMP pages will ever know about the fact that they’ve been preloaded.

Think about it this way: Say I search for “breakfast burrito”. If you know me well, you know I obviously searched for Parry Gripp’s song with the same name. But the search result page also shows me a couple of AMP search results from fast food chains that sell actual breakfast burritos. For the next month, I wouldn’t want to see actual breakfast burritos everywhere even though I didn’t click on these links (even though…maybe I do…mhh..), and an advertiser wouldn’t want to waste dollars on me for pointless re-marketing ads on all the burritos. Since AMP hides the preload from the publisher of the AMP page and related third parties, it’s a win-win scenario for users and advertisers.

Auto-optimizations that often result in dramatic speed increase

The AMP Cache started out with all of the above, but has since added a number of transformative transformations (heh) to its feature roster. Among those optimizations:

- Consistent, fast and free content delivery network for all content (not just big publishers).

- Optimizes HTML through measures such as bringing scripts into the ideal order, removing duplicate script tags and removing unnecessary quotes and whitespace.

- Rewrites JavaScript URLs to have infinite cache time.

- Optimizes images (a 40% average bandwidth improvement!)

On the image compression side alone, Google, through its cache, is doing lossless (without any visual change, e.g. removing EXIF data) and lossy (without noticeable visual change) compression. In addition, it converts images to WebP for browsers that support it and automatically generates srcset attributes (so-called responsive images) if they’re not already available, generating and showing correctly sized images to each device.
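To give a feel for the srcset step, here is a minimal sketch of generating a `srcset` value for an image that doesn’t have one. The function names, the candidate widths and especially the `?w=` resizing URL scheme are assumptions for illustration; the post does not describe how the cache actually addresses resized images.

```python
from typing import Iterable


def resized_url(image_url: str, width: int) -> str:
    """Hypothetical URL scheme for a resized variant; not the cache's real format."""
    return f"{image_url}?w={width}"


def build_srcset(image_url: str, original_width: int,
                 candidate_widths: Iterable[int] = (320, 640, 1280)) -> str:
    """Offer smaller variants plus the original width, never an upscaled one."""
    widths = sorted({w for w in candidate_widths if w < original_width} | {original_width})
    return ", ".join(f"{resized_url(image_url, w)} {w}w" for w in widths)


print(build_srcset("https://cache.example/img/burrito.jpg", original_width=1000))
# prints https://cache.example/img/burrito.jpg?w=320 320w, https://cache.example/img/burrito.jpg?w=640 640w, https://cache.example/img/burrito.jpg?w=1000 1000w
```

With width descriptors like these, the browser picks the smallest candidate that still fills the image’s slot on the device, which is how each device ends up with a correctly sized image.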

Isn’t there a better way of doing this?

Look, I hear you. The provider of an AMP Cache is mirroring your content. It’s an important role and comes with great responsibility. If the cache provider were to do something truly stupid, like inserting obnoxious ads into every AMP page, AMP would stop being a viable solution for publishers, and thus wither away.

Remember, AMP has been created together with publishers, as a means to make the mobile web better for publishers, users and platforms. It’s why the AMP team has released strict guidelines for AMP Caches. To give you two interesting excerpts, the guidelines state that a cache needs to provide “a faithful visual and UX reproduction of source document”, and cache providers must pledge that they will keep URLs working indefinitely, even after the cache itself may be decommissioned. These, and many more rules, ensure that a cache doesn’t mess with your content.

Most importantly, there’s plenty of room for more than one AMP Cache – in fact, Cloudflare just announced their own! With these AMP Cache guidelines released, other infrastructure companies are welcome to create new AMP Caches, as long as they follow the rules. It’s then up to the platform integrating AMP to pick their favorite cache.

From cache to web standards?

You just read about all the wins and trade-offs the AMP Caches make to provide an instant-feeling and user-friendly mobile web experience. What if we could get to many of the same awesome optimizations without the trade-offs, and without involving a cache at all?

Personally, I dream of future, still-to-be-invented web standards (like a static layout system to know what a page will look like before any assets are loaded) that would allow us to get there and move beyond cache models.

In 2016, we took our first baby steps with the Content Performance Policy (CPP), which turned into the Feature Policy: A way of saying things like “I disallow document.write on my site, and for any third parties in any iframes that get loaded”. More advanced concepts like static layout and safe pre-rendering require far-reaching changes to the web platform, but hey – just like forward time travel, it’s not impossible, just very, very difficult 🙂

Join me in figuring this out by getting in touch on Twitter or Slack, and know that I’ll always have an open ear for your questions, ideas and concerns. Onwards!
